Imagine a World of Robots

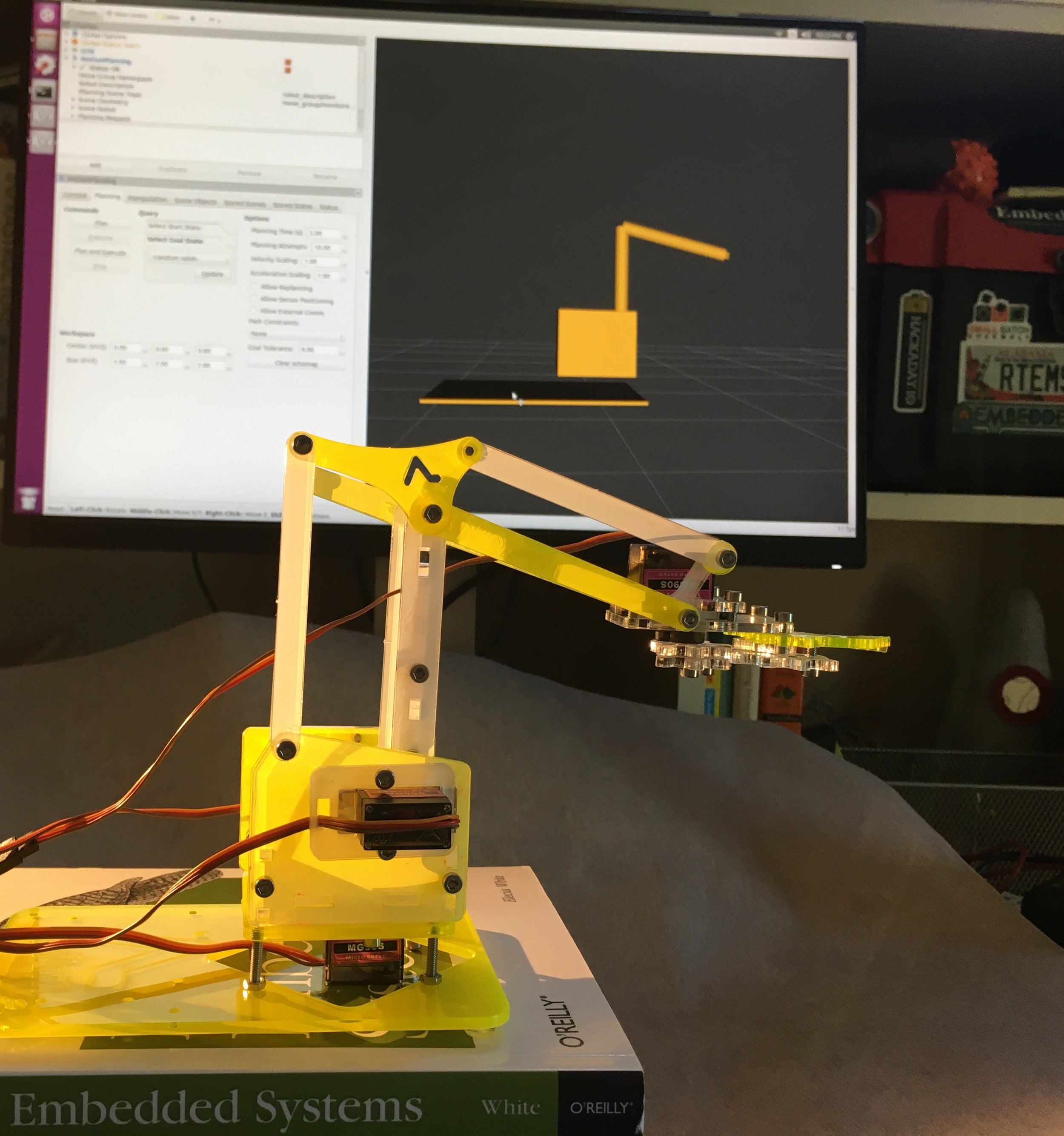

I have been working on a robot that I named Ty. It is a small arm that I control with an overpowered Jetson TX2, a board better suited to machine learning and virtual reality. I have learned a few things that I want to share with you.

Robotics is hard. The motor control is non-trivial. Figuring out how to move from where you are (given your joint states) to where you want to go (in XYZ, not even the same coordinate space) is demanding. Planning a whole sequence of motions while avoiding obstacles is some mind-bendingly difficult 3D trigonometry. Don’t even get me started on using a camera to figure out where you are or to identify objects you want to touch. Also, the sensors always lie, so don’t really trust them; instead, build in redundancy and figure out how to fuse the results into a representation of the real world. And if you actually want it to do things like type on a keyboard from voice control…
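To make the coordinate-space mismatch concrete, here is a minimal sketch for a hypothetical two-joint arm that moves in a plane. The link lengths and function names are made up for illustration (they are not Ty's). Going from joint angles to a hand position is straightforward trigonometry; going the other way means solving for angles, can have two answers or none, and only has a tidy closed form because this arm is so simple.

```python
import math
from typing import Optional, Tuple

# Hypothetical link lengths (meters) for a two-joint planar arm; not Ty's real dimensions.
LINK1 = 0.10
LINK2 = 0.08


def forward(theta1: float, theta2: float) -> Tuple[float, float]:
    """Joint space -> task space: joint angles (radians) to hand position (x, y)."""
    x = LINK1 * math.cos(theta1) + LINK2 * math.cos(theta1 + theta2)
    y = LINK1 * math.sin(theta1) + LINK2 * math.sin(theta1 + theta2)
    return x, y


def inverse(x: float, y: float) -> Optional[Tuple[float, float]]:
    """Task space -> joint space: one of the (up to two) joint-angle solutions
    that put the hand at (x, y), or None if the point is out of reach."""
    # Law of cosines gives the elbow angle from the distance to the target.
    c2 = (x * x + y * y - LINK1**2 - LINK2**2) / (2 * LINK1 * LINK2)
    if not -1.0 <= c2 <= 1.0:
        return None  # target is outside the arm's workspace
    theta2 = math.acos(c2)
    # Shoulder angle: direction to the target minus the offset the bent elbow adds.
    theta1 = math.atan2(y, x) - math.atan2(LINK2 * math.sin(theta2),
                                           LINK1 + LINK2 * math.cos(theta2))
    return theta1, theta2


angles = inverse(0.12, 0.05)
print(angles, forward(*angles))
```

Feeding the computed angles back through `forward` lands the hand at (0.12, 0.05) up to floating-point error. A real arm with more joints usually needs a numerical solver instead.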

What if you could farm out all these parts to experts in these fields? What if you didn’t have to write it all yourself?

Re-inventing the Robot Operating System

Instead of telling you what I’ve learned about ROS (aka Robot Operating System), I suggest we mentally reinvent it. I think it will make more sense if we consider the whys before the hows.

Ok, let’s start with the idea that we can get lots of people who are experts in their fields to do all the hard things for us. How do we get them to work together? We want them to be very loosely coupled. For example, a motion planner is that mind-bendingly difficult 3D trigonometry I mentioned earlier. It takes in current position data and a goal, and outputs a series of positions for the robot controller to go to (these positions are called waypoints). Even though a motion planner needs to work with lots of different robot types and motor configurations, we don’t want to build in a lot of dependencies between the parts. We don’t want the motion planner to care about the camera or the machine vision code; it should just take the goal location. Though it may need to know about obstacles it might collide with, so maybe a little more than the goal location… and then it would be useful to give it some feedback so it knew if the motors were drawing too much current, indicating an error. It would be easy to let this motion planner take over the whole system.
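The input/output contract described above (current joint positions plus a goal in, waypoints out) can be sketched with a deliberately naive stand-in. This is an assumption-laden toy, not how any real planner works: it interpolates in a straight line through joint space and caps how far any joint moves between waypoints. The function name and the `max_step` parameter are hypothetical.

```python
import math
from typing import List, Sequence


def plan_waypoints(current: Sequence[float], goal: Sequence[float],
                   max_step: float) -> List[List[float]]:
    """Break one joint-space move into waypoints so that no joint changes by
    more than max_step (radians) between consecutive waypoints.

    Straight-line interpolation only: no obstacles, joint limits, velocity
    limits, or current feedback, all of which a real motion planner handles.
    """
    if len(current) != len(goal):
        raise ValueError("current and goal need the same number of joints")
    if max_step <= 0:
        raise ValueError("max_step must be positive")
    # The joint with the farthest to go decides how many waypoints we need.
    largest = max((abs(g - c) for c, g in zip(current, goal)), default=0.0)
    steps = max(1, math.ceil(largest / max_step))
    waypoints = []
    for i in range(1, steps):
        frac = i / steps
        waypoints.append([c + (g - c) * frac for c, g in zip(current, goal)])
    waypoints.append(list(goal))  # finish exactly on the goal
    return waypoints


for wp in plan_waypoints([0.0, 0.0], [1.0, 0.5], max_step=0.3):
    print(wp)
```

This prints four waypoints ending at `[1.0, 0.5]`. Notice how little the function needs to know: everything it ignores (obstacles, current draw) is exactly the extra information that makes a real planner sprawl.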

Sadly, every piece of Ty I’ve worked on has this same feel: it only needs a little information, except for all of the other information it also needs. Every module (every concept!) starts small and then spaghettis out. We want to reduce the spaghetti and, ideally, keep everything contained.

Essential Architecture

One of the first things we need to define is a way for all the pieces to talk to each other while hiding as much of their insides as possible. The camera module should squawk pictures, and the vision piece should listen for those pictures and squawk a region of interest. The motor sensors should squawk their position so the motion planner can use them to squawk a series of waypoints for the motor controller to attempt to meet. The motor controller will also listen to the current monitoring sensors so it knows how it is doing.

Thus, we have talkers and listeners, and anyone can be one or both. This is a common software pattern; usually we call them publishers and subscribers. This very loosely connected approach will let our experts solve the pieces of the robot problem without directly depending on one another: you feed me this input and I’ll spout that output.
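Here is a toy, in-process version of that idea, just to show the shape of the decoupling. It is not the ROS API: `Bus`, the topic names, and the brightest-pixel "vision" are all made up for illustration, and real ROS topics carry typed messages between separate processes rather than calling Python functions directly.

```python
from collections import defaultdict
from typing import Any, Callable, DefaultDict, List


class Bus:
    """A toy publish/subscribe bus. Callbacks run synchronously inside publish()."""

    def __init__(self) -> None:
        self._subscribers: DefaultDict[str, List[Callable[[Any], None]]] = defaultdict(list)

    def subscribe(self, topic: str, callback: Callable[[Any], None]) -> None:
        self._subscribers[topic].append(callback)

    def publish(self, topic: str, message: Any) -> None:
        for callback in list(self._subscribers[topic]):
            callback(message)


# Hypothetical modules: each one only knows the topic names it reads and writes.
bus = Bus()


def vision_node(image):
    # Pretend "vision": report the brightest pixel as the region of interest.
    cells = ((r, c) for r in range(len(image)) for c in range(len(image[0])))
    row, col = max(cells, key=lambda rc: image[rc[0]][rc[1]])
    bus.publish("region_of_interest", (row, col))


received = []
bus.subscribe("camera/image", vision_node)
bus.subscribe("region_of_interest", received.append)

# The "camera" squawks a tiny 2x3 grayscale image.
bus.publish("camera/image", [[0, 10, 3], [7, 99, 1]])
print(received)  # [(1, 1)]
```

The camera never learns who uses its pictures, and whatever listens for regions of interest never learns where they came from; either side can be swapped out as long as the topic and message shape stay the same.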

A big part of ROS is this publisher-subscriber model: messages are published on named topics. Of course, you could build that yourself, but ROS gives you tools to eavesdrop on topics, inject messages, and map out who is talking to what. The ROS core program’s main job is helping the modules find each other.
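To make "helping the modules find each other" concrete, here is a toy name service. It is a hypothetical sketch of the idea, not the actual ROS core interface: nodes register which topics they publish or subscribe to, a subscriber gets back the list of publishers it should connect to, and message data never flows through the registry itself. A real implementation would also keep subscribers updated as publishers come and go, which this skips.

```python
from collections import defaultdict
from typing import DefaultDict, List, Set, Tuple


class Registry:
    """Toy lookup service: records who publishes and subscribes to each topic."""

    def __init__(self) -> None:
        self.publishers: DefaultDict[str, Set[str]] = defaultdict(set)
        self.subscribers: DefaultDict[str, Set[str]] = defaultdict(set)

    def register_publisher(self, topic: str, node: str) -> None:
        self.publishers[topic].add(node)

    def register_subscriber(self, topic: str, node: str) -> List[str]:
        self.subscribers[topic].add(node)
        # Hand back the current publishers so the subscriber can connect to them directly.
        return sorted(self.publishers[topic])

    def graph(self) -> List[Tuple[str, str, str]]:
        """(publisher, topic, subscriber) edges: a map of who talks to whom."""
        return sorted((pub, topic, sub)
                      for topic, pubs in self.publishers.items()
                      for pub in pubs
                      for sub in self.subscribers.get(topic, ()))


registry = Registry()
registry.register_publisher("camera/image", "camera")
registry.register_publisher("region_of_interest", "vision")
print(registry.register_subscriber("camera/image", "vision"))  # ['camera']
registry.register_subscriber("region_of_interest", "motion_planner")
for edge in registry.graph():
    print(edge)
```

The `graph()` bookkeeping is the same information an introspection tool needs to draw the "who is talking to what" map.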

Testing and Simulation

With all this message passing, most of the modules that don’t directly interface with the real world can be run and re-run based only on the data, making them much easier to test. That’s the second piece to really consider: how are we going to test this? What if you don’t have as many robots as you do experts working on the pieces? What if your robot is very expensive? What if the hardware isn’t done yet but the software needs to make progress or we’ll lose funding?

Testing is important. And with this highly distributed system, we need to provide offline tests so each of our experts can be confident that their part of the system is working.
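As an example of data-only testing, here is a hypothetical current-monitoring module (the kind of feedback the motion planner above wanted) checked against a recorded log. The function name, the 500 mA limit, the 3-sample window, and the log values are all invented for illustration; the point is that the test never touches a motor and can be re-run as often as you like.

```python
import json
from typing import List


def overcurrent_flags(samples_ma: List[float], limit_ma: float, window: int = 3) -> List[bool]:
    """For each sample, flag True if the mean of the last `window` samples
    (fewer at the start) exceeds limit_ma. Averaging ignores one-sample spikes."""
    flags = []
    for i in range(len(samples_ma)):
        recent = samples_ma[max(0, i - window + 1): i + 1]
        flags.append(sum(recent) / len(recent) > limit_ma)
    return flags


# A made-up "recording": motor current captured once, plus the flags we expect.
RECORDED_LOG = ('{"current_ma": [100, 120, 900, 110, 800, 850, 900], '
                '"expected": [false, false, false, false, true, true, true]}')

log = json.loads(RECORDED_LOG)
assert overcurrent_flags(log["current_ma"], limit_ma=500) == log["expected"]
print("replay test passed")
```

The single 900 mA spike is ignored, while the sustained high draw at the end is flagged; changing the window or limit and re-running against the same log shows exactly what the change did.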

ROS provides extensive (really, really extensive) simulation resources. Ideally, you describe your robot and your environment, and then you get access to visualization, re-runnable tests, and simulation. RViz is a visualization tool that shows you what the system thinks the robot is doing, which gives you great feedback about how it should perform. Another program, Gazebo, is a physics simulator that lets you run your robot in real-world (ok, real-world-ish) conditions. You can even create a simulated world for it and use a fake camera to drive your simulated robot around. And if you crash it into a wall, it is hilariously funny (less so in real life with shockingly expensive gear).
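This is nothing like Gazebo internally, but a tiny sketch shows why simulation pays off: if controller code is written against an interface, it can drive a simulated joint today and a real motor driver later without changing. The `Joint` interface, the crude `SimulatedJoint` (perfect integration of a speed-limited velocity command, with no inertia, friction, or noise), and the proportional `move_to` controller are all hypothetical.

```python
from abc import ABC, abstractmethod


class Joint(ABC):
    """What the controller needs from a joint, real or simulated."""

    @abstractmethod
    def read_position(self) -> float: ...

    @abstractmethod
    def command_velocity(self, rad_per_s: float, dt: float) -> None: ...


class SimulatedJoint(Joint):
    """Crude physics: move at the commanded velocity, clamped to a speed limit."""

    def __init__(self, position: float = 0.0, max_speed: float = 1.0) -> None:
        self.position = position
        self.max_speed = max_speed

    def read_position(self) -> float:
        return self.position

    def command_velocity(self, rad_per_s: float, dt: float) -> None:
        v = max(-self.max_speed, min(self.max_speed, rad_per_s))
        self.position += v * dt


def move_to(joint: Joint, target: float, gain: float = 2.0, dt: float = 0.01,
            tolerance: float = 1e-3, max_steps: int = 10_000) -> int:
    """Proportional control toward target; returns how many steps it took."""
    for step in range(max_steps):
        error = target - joint.read_position()
        if abs(error) < tolerance:
            return step
        joint.command_velocity(gain * error, dt)
    raise RuntimeError("joint did not reach the target")


sim = SimulatedJoint()
steps = move_to(sim, target=1.0)
print(steps, round(sim.read_position(), 3))
```

Crashing this simulated joint costs nothing, which is the whole appeal.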

That’s sort of it. ROS is an architecture, some tools for that architecture, and some testing tools. It works on Ubuntu and is written in C++ and Python.

Community

Oh, except many people have put their ROS code online. So you know those experts who we hoped would write some of our difficult parts? Yeah, they have done so. Now you have to find the code you want and see if you can understand their complex module well enough to use it.

I’ve been mentioning this motion planner as an example of something hard. I initially thought I could find a region of interest in my camera images and then use some math to translate that into joint angles for my motors. But my motors were being forced to make huge jumps, which is bad for them. And I would run into problems where I needed to move one motor before the other or they’d end up in a bad position. Looking ahead, when I have two arms, they will be able to collide with each other; how do I avoid that?

Someone did this for me. ROS has a motion planner called MoveIt!, so I can stop worrying about it: I set a few parameters, describe my robot to it, and it moves. I can even get a visual representation in the visualizer (RViz) of what the planner thinks my robot should do and compare it with the robot itself.

Of course, many other hard problems have already been solved. There are whole robots available for simulation. It is kind of amazing that you could work on interesting robotics problems without wondering if your robot’s battery is charged, chasing it down the hallway, or crashing it into a wall.

Conclusion

If you want to know more about ROS, I enjoyed O’Reilly’s Programming Robots with ROS. It was a little frustrating that it spent the early chapters on theory when you really want to get your hands dirty, but it more than makes up for it: once you are playing with the simulated robots, the theory makes sense. If you just want to dip your toe in, look at the ROS Wiki, especially its robots section. Those tutorials will let you build and simulate those robots.

I liked ROS for the architecture implementation, the testability through simulation, and the wealth of existing modules. Of course, the downside is that there is a lot of documentation to wade through and understand (and you have to figure out what applies to you, what is actually a half-finished PhD thesis from three years ago, and what has the normal open-source, somewhat-supported bugs that make it difficult to actually use).